Active Projects

Real-Time Navigation System for Cardiac Interventions

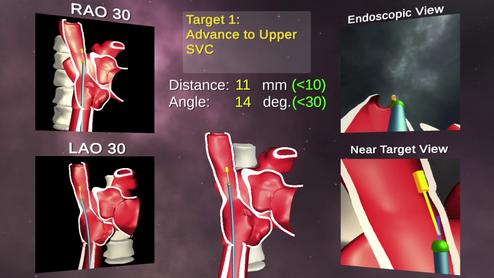

The overall goal of this project is to develop a mixed reality (MR) and deep learning (DL)-based system for intra-operative image guidance of interventional procedures conducted under fluoroscopy. This guidance system will offer a quantitative and intuitive method for navigating and visualizing percutaneous interventions by rendering, via an MR headset, a true 3-dimensional (3D) representation of the position of a catheter inside a patient’s body. It will use novel DL algorithms for real-time segmentation of fluoroscopy images and co-registration with 3D imaging data, such as cone-beam computed tomography (CBCT), CT, or magnetic resonance imaging (MRI).

Percutaneous and minimally invasive procedures are used for a wide variety of cardiovascular ailments and are typically guided by real-time imaging modalities, such as x-ray fluoroscopy and echocardiography. However, most organs are transparent to fluoroscopy, so contrast agents, which transiently opacify structures of interest, must be used to visualize the surrounding tissue. Furthermore, fluoroscopy provides only a 2-dimensional (2D) projection of the catheter and device, and therefore no information on depth. Echocardiography can directly image anatomic structures and blood flow, so it is often used as a complementary imaging modality. Although echocardiography can provide useful intra-operative 2D and 3D images, it requires skilled operators, and general anesthesia is a prerequisite for trans-esophageal echocardiographic guidance. The limitations of these techniques increase the complexity of procedures, which often require the interventionalist to determine the position of the catheter or device by analyzing multiple imaging angles and modalities. The added coordination of different specialties also increases resource utilization and costs.
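The loss of depth in a single fluoroscopic projection can be illustrated with a minimal sketch. It assumes an idealized pinhole geometry (x-ray source at the origin, beam along +z, flat detector); the function name, the 1000 mm source-to-detector distance, and the catheter-tip coordinates are hypothetical values chosen for illustration, not parameters of our system.

```python
def project_point(point_xyz, source_to_detector_mm=1000.0):
    """Perspective-project a 3D point (in mm) onto a flat detector.

    Assumed idealized geometry: the x-ray source sits at the origin, the
    beam axis is +z, and the detector is the plane z = source_to_detector_mm.
    """
    x, y, z = point_xyz
    if z <= 0:
        raise ValueError("point must lie in front of the source (z > 0)")
    scale = source_to_detector_mm / z  # magnification at this depth
    return (x * scale, y * scale)


# Two hypothetical catheter-tip positions on the same ray from the source,
# 300 mm apart in depth.
tip_shallow = (10.0, 5.0, 500.0)
tip_deep = (16.0, 8.0, 800.0)

print(project_point(tip_shallow))  # (20.0, 10.0)
print(project_point(tip_deep))     # (20.0, 10.0)
```

Both positions land on the same detector coordinates, so one view cannot distinguish them. This is why interventionalists rely on multiple imaging angles or additional modalities to recover depth.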

Pre-operative 3D imaging modalities provide detailed anatomic information and are often displayed on separate screens or overlaid on real-time imaging modalities to improve image-guided interventions. However, this method of fusion imaging obstructs the view of the real-time image during the procedure. Furthermore, all of these images are displayed on 2D screens, which fundamentally limits the ability to perceive depth and orientation.

Our lab is focused on solving the hurdles to creating this system, which include real-time catheter tracking, co-registration, and motion compensation. We have demonstrated the primary components of this system on a patient-specific 3D printed model for training of a transseptal puncture (Video 1). However, clinical use of this system requires more advanced deep learning-based architectures to achieve more accurate and rapid processing. We plan to optimize our software and hardware system for incorporation into a clinical workflow and to validate it in an IRB-approved pilot study, which will compare the performance of cardiac fellows conducting a mock procedure on a 3D printed heart phantom using our guidance system versus standard imaging.

Video 1: Demo captured during a training session for positioning a catheter to perform a transseptal puncture on a 3D printed heart. The catheter is tracked using EM sensors and co-registered to a CAD model, and both are rendered in real time in mixed reality. Quantitative feedback is provided as multiple views show both the catheter’s current position and the target for that procedural step.
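Co-registering tracker coordinates to a model can be sketched with a standard point-based rigid registration (the Kabsch/SVD method). This is an illustrative assumption, not necessarily the method used in the demo: the function name `rigid_register`, the fiducial coordinates, and the use of paired landmarks are all hypothetical.

```python
import numpy as np


def rigid_register(source_pts, target_pts):
    """Least-squares rigid transform (R, t) with target ≈ R @ source + t.

    Kabsch/SVD method on paired 3D points (one point per row).
    """
    src = np.asarray(source_pts, dtype=float)
    dst = np.asarray(target_pts, dtype=float)
    src_centroid = src.mean(axis=0)
    dst_centroid = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_centroid).T @ (dst - dst_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Guard against a reflection (determinant of -1).
    d = -1.0 if np.linalg.det(Vt.T @ U.T) < 0 else 1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_centroid - R @ src_centroid
    return R, t


# Hypothetical fiducials measured in EM-tracker space (mm).
tracker_pts = np.array([[0.0, 0.0, 0.0],
                        [40.0, 0.0, 0.0],
                        [0.0, 30.0, 0.0],
                        [0.0, 0.0, 20.0]])

# The same fiducials in CAD-model space, generated here from a known pose
# (30 degree rotation about z plus a translation) so the result can be checked.
theta = np.radians(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                   [np.sin(theta), np.cos(theta), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([5.0, -3.0, 12.0])
model_pts = tracker_pts @ R_true.T + t_true

R_est, t_est = rigid_register(tracker_pts, model_pts)

# Map a tracked catheter-tip position into model space for rendering.
tip_tracker = np.array([10.0, 10.0, 10.0])
tip_model = R_est @ tip_tracker + t_est
```

With noise-free fiducials the recovered transform matches the known pose. With real measurements, the residual distance between mapped and target fiducials gives a simple estimate of registration error.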

Mixed Reality for Patient Counseling and Surgical Guidance in the Treatment of Uterine Fibroids

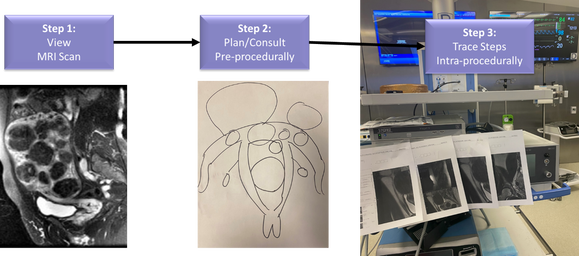

Uterine fibroids are the most prevalent benign tumors in women and disproportionately affect African American women. Currently, doctors counsel women using flat MRI images, but fibroids can grow in multiple uterine planes, and 2D imaging does not allow for optimal planning and intraoperative guidance.

Our technology brings MRI into the modern age by leveraging deep learning algorithms to generate 3D renderings that a physician can interact with before a procedure to improve diagnosis and path planning, and during the procedure to improve adherence to that plan, with the aim of fewer complications and shorter procedures.

We currently have IRB approval to perform a clinical trial assessing the usefulness of this technology for both patient counseling and minimally invasive surgery. Our goal is to characterize the benefits offered by 3D visualization on both 2D screens and mixed reality headsets, as compared to standard MRI viewers.

Figure 1. Overall workflow of advanced imaging for patient care. Step 1: MRI scan is acquired pre-procedurally and interpreted by a radiologist from 2D scans. Step 2: Patients are counseled using paper drawings or 2D slices of the MRI. Step 3: Intra-procedural guidance is performed by memorizing the positions of the fibroids, displaying them on 2D screens, or printing slices on paper.

Figure 2. Next-generation workflow using mixed reality and deep learning. Step 1: MRI scan is acquired pre-procedurally and automatically segmented by a deep learning model. Radiologists can confirm scans by viewing 3D views embedded on the 2D slices. Step 2: Patients are counseled using a 3D rendering of the segmented fibroids and anatomy. Step 3: Intra-procedural guidance is performed using a voice-command enabled interactive mixed reality experience for visualization and tracking of fibroid extraction.

Soft Robotic Mapping Catheter for Atrial Fibrillation

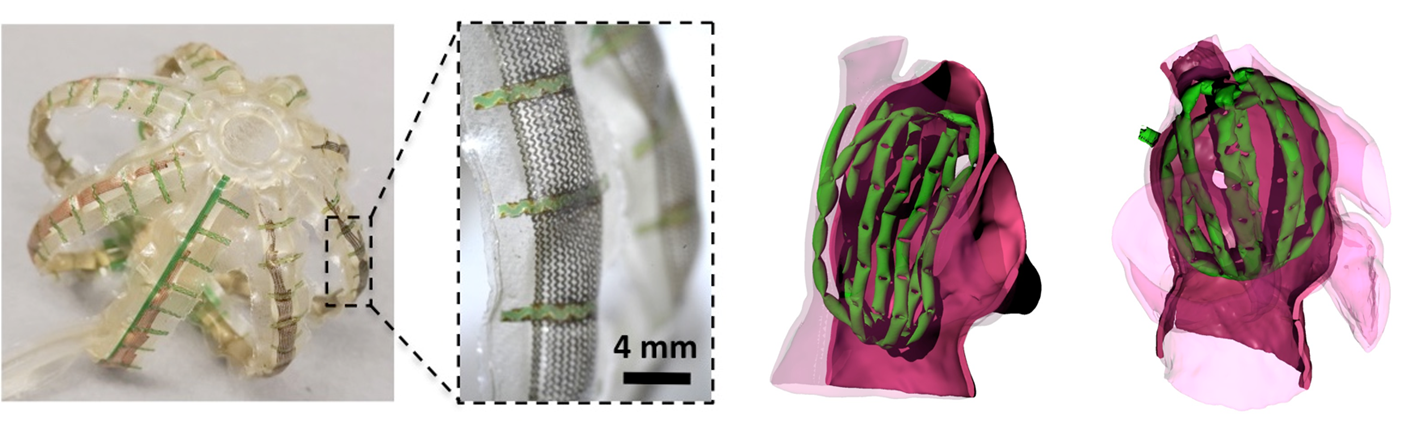

This project aims to develop and test a novel multi-electrode balloon catheter for acquiring whole-chamber cardiac electrograms for atrial fibrillation (AF) with unprecedented fidelity and speed. In the large number of cases where pharmaceuticals cannot manage AF or cause severe side effects, minimally invasive electrodes are used to map electrical signals and ablate tissue thought to be the source of aberrant electrical pathways. While existing ablation protocols can treat the disease in many cases, these procedures can be lengthy (typically 2-4 hours) and are only somewhat effective (~60-70% success). These inefficiencies can be attributed in large part to our poor understanding of the mechanisms of AF. Complex spatio-temporal electrophysiological features, such as “rotors,” are known to play a key role in the disease, but typically cannot be detected by conventional mapping systems because these systems acquire measurements serially, which prevents the measurements from being spatiotemporally resolved. New classes of multi-electrode catheters (known as basket catheters) have recently been developed to simultaneously map the entire atrium. Here, an array of sensors is mounted on a spherical cage of stiff nitinol splines. While this device is promising, the rigid mechanical properties of the sensors and the frame they are mounted on yield poor conformability (~60% contact), diminishing performance. Additionally, sensor position is not well controlled; many sensors group in crevices in the atrial anatomy. As a result, atrial maps often omit large regions of the chamber.

To address these challenges, Dr. Simon Dunham and Dr. Mosadegh have developed a cardiac mapping device consisting of collections of active, hydraulically actuated, soft-robotic actuators integrated with arrays of flexible electronic sensors that can map the entire left atrium (Fig. 1). We named this device the soft robotic sensor array (SRSA). Once actuated (i.e., pressurized), the system forces the electrode array into contact with the cardiac tissue without occluding blood flow. Since the soft actuators are constructed from compliant polyurethanes, they bend and stretch to adapt to the curvature and unique anatomy of the patient. Their high compliance and ability to undergo large deformations make these configurations well suited to complex atrial anatomies. This device leverages the advantages of soft robotic actuators and flexible electronic sensor arrays, both of which interface safely with tissue and conform to complex anatomy. Our central hypothesis is that these SRSAs, which utilize principles of soft, conformable sensing, will provide rapid and effective mapping of electrograms from the patient’s entire atria.

Enabling Technologies

Soft Robotics

Soft robots use the non-linear properties of elastomers to perform sophisticated tasks that would otherwise be impossible, or very complex and expensive, with traditional hard robotic components. The use of these “smart” materials allows the fabrication of robotic systems with fewer auxiliary sensors and feedback loops. One class of soft robotic actuators consists of elastomeric structures powered by pressurized fluids. These soft fluidic actuators are of particular interest for biomedical applications because they are lightweight, inexpensive, and easily fabricated, distribute forces easily, and can provide non-linear motion with simple inputs. We believe soft robotics will have a significant impact on surgical tools, implantable devices, and rehabilitation therapy.

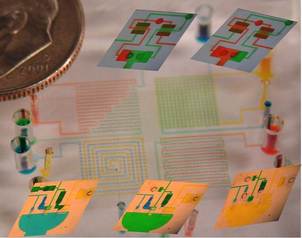

Microfluidics

Microfluidics is a technology that enables precise spatial and temporal control over small volumes of fluids, which exhibit unique behaviors not seen in macro-scale systems. Self-contained microfluidic devices (i.e., lab-on-a-chip technology) allow for sophisticated processing of biological fluids for diagnostic and therapeutic applications. Our lab aims to develop novel microfluidic devices with embedded fluidic controls that enable true lab-on-a-chip devices by eliminating the need for off-chip control systems. These devices will utilize a novel design concept, referred to as integrated microfluidic circuits (IMCs), which are networks of elastomeric valves that provide self-control of the timing of fluidic switching within the device, reminiscent of integrated circuits for electronic devices. The IMC technology will be useful for many applications of microfluidics, including combinatorial chemistry, point-of-care diagnostics, organs-on-chip cell culture, and other biomedical applications. In addition, this technology is applicable to other fields using fluidic actuation, such as soft robotics, medical/surgical tools, and energy harvesting systems.

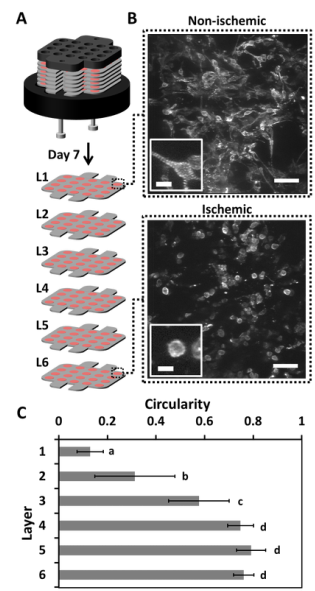

3D Cell Culture

Our lab aims to develop novel 3D culture systems that recapitulate cues from the microenvironment of tissues, in a form factor that allows for easy analysis and control of the system parameters. Our lab will use both microfluidic technologies and a novel multi-layer stacking system to achieve these goals. The multi-layer stacking system is a paper-based technology that utilizes two inherent properties of paper: wicking of liquids and stacking of sheets. The wicking property allows hydrogel solutions containing cells to be positioned within the thickness (~40-200 µm) of a sheet of paper. The stackability of paper allows layer-by-layer construction of thick constructs at the resolution of the thickness of each layer. The cells within these constructs are able to communicate, migrate, and remodel the ECM in a manner that captures the 3D environment of tissues in the body. Furthermore, the construct can be separated back into its individual layers, allowing for easy isolation and analysis of living subpopulations of cells; by contrast, typical analyses of thick tissues require complicated procedures that include killing the cells. This system is particularly useful for creating nutrient-specific (i.e., oxygen and glucose) gradients to mimic pathological conditions such as ischemia in tumors and cardiac tissue. Additionally, this system allows easy isolation of cells within particular regions of the stack, a capability that enables the use of cells in these systems for cell therapies and diagnostics (e.g., cancer stem cells, directing stem cell differentiation, and screening for pharmaceutical drugs).
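The relationship between sheet thickness and depth resolution in a stacked construct can be sketched with a small calculation. The function name and the six-sheet, 200 µm example are hypothetical, chosen within the ~40-200 µm range quoted above.

```python
def layer_depth_ranges(layer_thicknesses_um):
    """Return the (top, bottom) depth in µm spanned by each sheet.

    Depth 0 is the top surface of the stack; sheets are listed top to bottom.
    """
    ranges = []
    top = 0
    for thickness in layer_thicknesses_um:
        if thickness <= 0:
            raise ValueError("layer thickness must be positive")
        ranges.append((top, top + thickness))
        top += thickness
    return ranges


# Hypothetical construct: six sheets, each 200 µm thick.
stack_um = [200] * 6
ranges = layer_depth_ranges(stack_um)

for index, (top, bottom) in enumerate(ranges, start=1):
    print(f"layer {index}: {top}-{bottom} µm")
```

In this example the construct is 1200 µm thick, and de-stacking it yields six cell populations, each attributable to a known 200 µm depth band (for instance, the deepest sheet spans 1000-1200 µm).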